The Short Story

A client needed to protect files and information against data leaks. Since they were already an Office 365 tenant, Azure RMS was the obvious choice.

I implemented Azure RMS in conjunction with File Classification Infrastructure (FCI). It came together nicely; however, during testing it became evident that Microsoft still has a long way to go to deliver a dependable solution.

I found that RMS in combination with FCI is unreliable: it leaves some files unprotected and at risk of being leaked.

The Flaw

A deadly combination of PowerShell scripts and uncoordinated tasks leads to failures due to file sharing violations, and ultimately to unprotected files and potential data leaks.

While my implementation used Azure RMS, I dare say that a full-blown on-premises AD RMS solution would be plagued by the same flaw.

The Nitty-Gritty

The official Microsoft implementation document is available at https://docs.microsoft.com/en-us/information-protection/rms-client/configure-fci. The article says (highlights are mine):

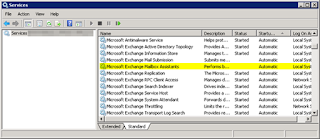

I did just that. Then I started testing. I set the schedule for the task (remember, "continuous operation" was also enabled).

The first thing that I noticed was that some of my test files remained unprotected after the task ran. In the Application event log I noticed the following error:

Tried the easy way out, checking the HTML report:

Error: The command returned a non-zero exit code.

Useless as a windshield wiper on a submarine.

Every time the scheduled RMS script ran, I got random files being listed as failing. Most weird...

NOTE: Before anyone starts to agitate, I want to note that I dropped a copy of the RMS-Protect-FCI.ps1 file in the test folder. This is NOT the copy that's being run to protect files, but just a copy of it for testing generic protection.

I checked the log mentioned in the event. The result: same as above (useless):

More digging revealed that additional logs are created in the same folder as the one above. The log in the screenshot (see further below) correlates with the one above: it was created roughly 5 minutes later, and that delay was consistent throughout my tests. Your tests may show a different delay depending on server performance, but it should be consistent between test runs.

In the log I was getting the following:

Item Message="The properties in the file were modified during this classification session so they will not be updated. Another attempt to update the properties will be made during the next classification session."

and

Rule="Check File State Before Saving Properties"

These are different files, but nevertheless I had them in the same test folder and they correlate with previous events. We are getting somewhere but not quite there yet. The information is insufficient to draw any conclusions.

For the record, log locations are configured as follows:

Then I tried to manually protect a file. It produced a file corruption error:

Protect-RMSFile : Error protecting Shorter.doc with error: The file or directory is corrupted and unreadable. HRESULT: 0x80070570

I instantly kicked off CHKDSK, but it came back clean. I tried to open the file in question - successful. Apparently there was no corruption, I could open the file, yet RMS failed due to file or folder corruption. Go figure.

Then I wanted to see what the file protection status was in general. I ran the following command as documented at https://docs.microsoft.com/en-us/information-protection/rms-client/configure-fci:

foreach ($file in (Get-ChildItem -Path C:\RMSTest -Force | where {!$_.PSIsContainer})) {Get-RMSFileStatus -f $file.PSPath}

To my surprise, I got a file access conflict error:

Get-RMSFileStatus : The process cannot access the file because it is being used by another process. HRESULT: 0x80070020

I had run this command numerous times during my tests, but I had never seen this error before. A subsequent run of the same command came back clean. Was it the backup? Was it the antivirus? It was the middle of the night, so no-one was accessing the files except my script. I was puzzled...
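Since the sharing violation went away on the next run, one way to tell a transient lock from a persistent one is to retry the call a few times. The following is only a minimal sketch: `Invoke-WithSharingRetry` is a hypothetical helper of mine, not part of the RMS tooling, and matching on the error text is an assumption based on the message shown above.

```powershell
# Hypothetical helper, not part of the RMS tooling: re-run an action when it
# fails with a sharing violation (HRESULT 0x80070020); rethrow anything else.
function Invoke-WithSharingRetry {
    param(
        [Parameter(Mandatory)] [scriptblock] $Action,
        [int] $MaxAttempts = 5,
        [int] $DelaySeconds = 2
    )
    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            return & $Action
        }
        catch {
            # Assumption: the error message carries the HRESULT or the Win32 text.
            $sharing = $_.Exception.Message -match '0x80070020|being used by another process'
            if (-not $sharing -or $attempt -eq $MaxAttempts) { throw }
            Start-Sleep -Seconds $DelaySeconds
        }
    }
}

# Usage on the file server (requires the RMS protection cmdlets):
# foreach ($file in (Get-ChildItem -Path C:\RMSTest -Force | where {!$_.PSIsContainer})) {
#     Invoke-WithSharingRetry { Get-RMSFileStatus -File $file.PSPath -ErrorAction Stop }
# }
```

The `-ErrorAction Stop` in the usage lines matters: without it the cmdlet's error may be non-terminating and the `catch` block would never see it. A retry only hides the symptom, of course; it does not explain who is holding the file.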

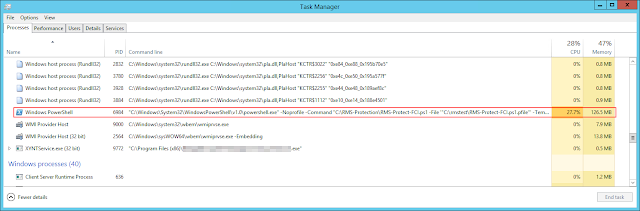

A bit later, I was watching Task Manager while the scheduled task was in progress, with "continuous operation" also enabled. I noticed that I had two RMS PowerShell tasks running concurrently:

One of them disappeared as soon as the scheduled task completed, while the other one, spawned by what I think is "continuous operation", remained. This may explain the random sharing violations and errors in the logs above.

I also noticed that while new files that I created via Word or Excel were protected almost instantly as a result of having continuous operation enabled, files that I copied from elsewhere were protected neither by the "continuous operation" task nor by the scheduled task.

I also want to point out that the PowerShell script can be scheduled no more often than once a day. Therefore, if it fails to protect a file, there is at least a 24-hour window until it runs again, during which unprotected files can be leaked.
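Task Manager only shows that two PowerShell processes exist. To see which is which, their command lines and parent process IDs can be listed. This is a rough sketch: the function names are mine, `RMS-Protect-FCI.ps1` is the script name used in this post, and I am assuming the processes show up as `powershell.exe`.

```powershell
# Keep only processes whose command line mentions the protection script.
# RMS-Protect-FCI.ps1 is the script name used in this post; adjust if yours differs.
function Select-ScriptProcess {
    param(
        [Parameter(ValueFromPipeline)] $Process,
        [string] $ScriptName = 'RMS-Protect-FCI.ps1'
    )
    process {
        if ($Process.CommandLine -like "*$ScriptName*") { $Process }
    }
}

# List the matching PowerShell processes, oldest first, with their parent PID.
function Get-RmsScriptProcess {
    Get-CimInstance -ClassName Win32_Process -Filter "Name = 'powershell.exe'" |
        Select-ScriptProcess |
        Sort-Object CreationDate |
        Select-Object ProcessId, ParentProcessId, CreationDate, CommandLine
}
```

Running `Get-RmsScriptProcess` while the scheduled task is in progress should list both instances. The command line of processes owned by other accounts is likely to come back empty unless the session is elevated.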
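To find out which files were skipped, the status command from earlier can be filtered down to the files that are not protected. This is a sketch under an assumption: that the objects returned by `Get-RMSFileStatus` carry `FileName` and `Status` properties and that a protected file reports `Protected`. The function names are mine.

```powershell
# Keep only status objects that are not reported as protected.
# Assumption: each object has a Status property that reads 'Protected' when protected.
function Select-UnprotectedFile {
    param([Parameter(ValueFromPipeline)] $FileStatus)
    process {
        if ($FileStatus.Status -ne 'Protected') { $FileStatus }
    }
}

# Report the files directly under a folder that are not protected.
# C:\RMSTest is the test folder used in this post.
function Get-UnprotectedFile {
    param([string] $Path = 'C:\RMSTest')
    Get-ChildItem -Path $Path -Force |
        Where-Object { -not $_.PSIsContainer } |
        ForEach-Object { Get-RMSFileStatus -File $_.PSPath } |
        Select-UnprotectedFile
}
```

Calling `Get-UnprotectedFile` right after a classification run, and again after the next one, would show whether the same files keep being missed or whether the set changes from run to run.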

As I mentioned before, I had been watching Task Manager. I noticed that the RMS PowerShell script took up significant processing power. The official Microsoft documentation, "RMS protection with Windows Server File Classification Infrastructure (FCI)" (linked above), states that the script is run against every file, every time:

Knowing the effects an iterative file scan has on disk performance, I kicked off a Perfmon session that shows CPU utilization and % Disk Time, just to check. Then, while watching it, I turned off "continuous operation" in File Server Resource Manager:

The effect was staggering: the CPU almost instantly took a holiday and the disk too was much happier:
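For anyone who wants to repeat the measurement without the Perfmon GUI, the same two counters can be sampled from PowerShell with `Get-Counter`. This is a minimal sketch: the function names are mine, and the counter paths assume an English-language Windows installation.

```powershell
# Flatten one Get-Counter sample set into a row with CPU and disk figures.
function ConvertTo-LoadRow {
    param([Parameter(ValueFromPipeline)] $SampleSet)
    process {
        $cpu  = $SampleSet.CounterSamples | Where-Object Path -like '*% processor time'
        $disk = $SampleSet.CounterSamples | Where-Object Path -like '*% disk time'
        [pscustomobject]@{
            Time            = $SampleSet.Timestamp
            CpuPercent      = [math]::Round($cpu.CookedValue, 1)
            DiskTimePercent = [math]::Round($disk.CookedValue, 1)
        }
    }
}

# Sample total CPU utilization and % Disk Time at a fixed interval.
function Get-LoadSample {
    param([int] $IntervalSeconds = 5, [int] $Samples = 12)
    $counters = '\Processor(_Total)\% Processor Time',
                '\PhysicalDisk(_Total)\% Disk Time'
    Get-Counter -Counter $counters -SampleInterval $IntervalSeconds -MaxSamples $Samples |
        ConvertTo-LoadRow
}
```

Running `Get-LoadSample` once with "continuous operation" enabled and once with it disabled gives two short series that can be compared side by side.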

Putting the puzzle together:

- The File Server Resource Manager runs a scheduled task and a continuous task concurrently.

- I am getting random files failing to be protected (see SRMREPORTS error event 960 and associated logs above)

- I am getting random sharing violation errors.

- I had one corruption report, although I cannot be sure it was the result of my setup.

- I expected the "continuous operation" mode to be event-driven (that is, triggered only when a new file is dropped in the folder). What Task Manager indicates, though, is that it is an iterative task in an endless loop that constantly scans the disk. This seems to be corroborated by the Perfmon data.

- The "continuous operation" PowerShell task is a resource hog.
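To check how often the failure from the list above occurs, the Application log can be queried for event 960. This is a hedged sketch: the function names are mine, and I am assuming that the event source is registered under the provider name `SRMREPORTS`, as the event label suggests.

```powershell
# Build the event filter: Application log, SRMREPORTS source, event ID 960,
# limited to the last N hours. The provider name is an assumption.
function New-FciFailureFilter {
    param([int] $Hours = 24)
    @{
        LogName      = 'Application'
        ProviderName = 'SRMREPORTS'
        Id           = 960
        StartTime    = (Get-Date).AddHours(-$Hours)
    }
}

# Return the matching events, newest first.
function Get-FciFailureEvent {
    param([int] $Hours = 24)
    # Get-WinEvent raises an error when nothing matches, so that case is silenced.
    Get-WinEvent -FilterHashtable (New-FciFailureFilter -Hours $Hours) -ErrorAction SilentlyContinue |
        Select-Object TimeCreated, Id, LevelDisplayName, Message
}
```

Comparing the timestamps returned by `Get-FciFailureEvent` with the schedule of the classification task should show whether the failures cluster around the times when both tasks are running.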

Choices, Choices...

It looks like having "continuous operation" enabled for instant protection of new files works okay. Therefore you would want it turned on to ensure that chances of leaking sensitive data are minimised. However, as I have observed, it conflicts with the scheduled file protection task, leaving random files unprotected.

On the other hand, I also observed that if I turn off continuous operation, then, besides the fact that the system breathes a lot easier, the scheduled task protects files more reliably. On the downside, it leaves a significant time window during which files aren't protected and thus sensitive information can be compromised.

In Conclusion

My observations lead me to conclude that when PowerShell scripts are used to RMS-protect files, the File Server Resource Manager fails to coordinate the scheduled and continuous operation tasks, which results in random sharing violations and ultimately in unprotected files. As a result, the primary purpose of RMS, that of protecting files, falls flat on its face, giving the unsuspecting or malicious user plenty of time to leak potentially sensitive data.

Additionally, the way PowerShell scans the file system and (re)protects files results in significant performance degradation even on lightly utilised systems.

While RMS is a great piece of technology that mitigates the risk of leaking sensitive data, it seems to have a long way to go before it becomes a dependable tool when integrated with File Classification Infrastructure.

PS: All this is based on observation and trial and error, and hence it may be incorrect in some respects. Information is scarce out there. I welcome any comments, suggestions and pointers to additional information.